Posted

Here are a number of features I would like to see in Synfire. I believe most of them are relatively simple.

Send REC trigger to DAW

It would be nice to have a way to send a MIDI CC or note to trigger recording (audio, MIDI or any kind) in a ReWire-synced DAW. The DAW would need MIDI learn for the transport, but most of them have it.

As it is now, I hit play in Synfire and then rush to hit the REC button in Reaper to record audio.

A latency control would be useful to make sure the record happens after the play (so the DAW transport is definitely being controlled by ReWire and not by the record command).

New container default length

CTRL+SHIFT+N never seems to give a useful length. I'm longing for a 4 bar default. Perhaps the grid value settings could control the lengths when creating new containers, where 1 is 1 bar and 2, 4, 6, 8, 12 etc. would follow suit.

EDIT: I just saw that a loop selection will set the container length when creating a new container. That will work fine.

Record cues from midi notes

It would be great to be able to drop cues by recording midi notes into the time parameter, or drag-dropping a figure.

Display / Edit values in parameter block.

The parameter inspector is for the most part a waste of space. 1/5 of the screen is occupied by one slider most of the time.

I use a two monitor setup, but between the DAW, the arrange window, palettes, phrase editors and the harmonizer there is not enough space as it is.

It would be nice to be able to minimize the parameter inspector and display and edit parameters in the parameter block.

--- controllers could overlay the value as a meter; clicking would adjust or add a point.

--- multiparameter parameters like interpretation could offer little check boxes -- within the current space i could easily work with two rows of boxes to quickly control and display the most important settings, via color coding of the parameter (for voice leading, black=weak, green=adaptive etc)...

--- polyphonic parameters like velocity could split the meters. In the current vertical space of each parameter I think it would be possible to fit 5 meters.

--- harmony could offer something like [g][min][#7][]:: where each part could be dragged up or down to adjust the root, type and then a few empty slots for additions. The "::" would be four check boxes to select which layer is being displayed.

Display ALL harmonic layers

It would be useful if the harmony ruler in the arrange window was able to toggle to display all four layers at once.

CTRL+F find instrument name (partial match)

My Synfire template has 130 instruments loaded up and minimized using the "minimize instruments that have no phrases" option. This works great for me as they take up very little screen space and each container has only the used instruments expanded.

It's quite a scroll to find instruments quickly. A simple find/find next control would make it easy to type in the first few letters of the instrument name or a specific text feature in the name.
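A rough sketch of what I mean by find / find next, assuming a case-insensitive partial match that wraps around the instrument list. The function name `find_next` and the instrument names are made up for the example; this says nothing about how Synfire stores its instruments.

```python
def find_next(names, query, start=0):
    """Return the index of the next name containing `query`, or None.

    The search is case-insensitive, begins at `start`, and wraps around
    to the top of the list, like a typical "find next" command.
    """
    q = query.lower()
    n = len(names)
    for offset in range(n):
        i = (start + offset) % n
        if q in names[i].lower():
            return i
    return None


instruments = ["Violin 1", "Violin 2", "Viola", "Cello"]
first = find_next(instruments, "vio")               # first hit
second = find_next(instruments, "vio", first + 1)   # "find next"
print(first, second)
```

Calling it again with `start` set to one past the last hit is all "find next" needs to do.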

Rate control - similar to Reapers.

It would be very useful to have a "rate control" that would temporarily scale the tempo independently from the tempo parameter.

This would let us record complex parts at a slower rate without blowing away all of the tempo adjustments at every turn.
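To make the idea concrete, here is a minimal sketch, assuming the rate is a simple multiplier applied at playback time while the stored tempo values stay untouched. `effective_tempo` and the sample tempo values are hypothetical.

```python
def effective_tempo(tempo_bpm, rate):
    """Playback tempo for a stored tempo value and a temporary rate factor."""
    if rate <= 0:
        raise ValueError("rate must be positive")
    return tempo_bpm * rate


# The tempo parameter itself is never modified, only how it is played back.
tempo_parameter = [120, 126, 132, 118]
half_speed = [effective_tempo(t, 0.5) for t in tempo_parameter]
print(half_speed)        # playback tempos at half rate
print(tempo_parameter)   # original tempo adjustments are intact
```

Setting the rate back to 1.0 restores the original tempo curve exactly, which is the whole point.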

Drag Phrases From Library - Left Edge

It would be very useful to be able to drag phrases into instruments with existing phrases. Like chords, the left edge of the phrase could signify where the phrase would begin.

Phrase Editor instrument sheet toggle

It would be very cool if there was a hot key toggle to switch between the usual instrument sheet and a phrase editor for the currently selected instrument, in the instrument sheet editor of the arrange window.

Rewire Connect

ReWire is never able to reconnect to the DAW.

It takes about 5 min to restart and 10 min to load my DAW template. I pay that cost whenever ReWire accidentally gets disconnected, whenever I want to open up a new project, and whenever Synfire or the DAW crashes.

It would be great and extremely useful if it were possible to unclick and click the sync button without worry. This would make it a lot easier to work with MTC synced video as well.

Dynamics Analysis and Control

The most basic aspect of this would be a way to adjust all of the velocities, breath, volume and/or expression controls for a container -- not to set them to the same value, but to scale them by a value.

This would let us quickly and easily refine the macro structure and dynamic of a piece.
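The difference between scaling and setting can be sketched like this, assuming plain 0-127 MIDI values. The function name and the example velocities are made up for illustration.

```python
def scale_controller_values(values, factor, lo=0, hi=127):
    """Scale each MIDI value by `factor`, then round and clamp to [lo, hi].

    Relative differences between the values are preserved (until clamping),
    unlike setting every value to the same number.
    """
    return [max(lo, min(hi, round(v * factor))) for v in values]


velocities = [40, 64, 100, 120]
softer = scale_controller_values(velocities, 0.8)
louder = scale_controller_values(velocities, 1.5)
print(softer, louder)
```

Scaling up eventually runs into the 127 ceiling, so a real implementation would probably want to warn when values get clamped.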

This is a more complex aspect, but it would be very cool if it were possible to route the DAWs audio back through the REWIRE into synfire for basic loudness analysis.

This would let synfire run an automated analysis on instruments to determine the relative effects and curves the different dynamic parameters have on an instrument.

I do this to some degree now, by playing a scale with velocity and breath set to 127 and volume set to 64. I then use T-Racks' "loudness" meter to adjust the loudness of each articulation to a consistent range.

My method doesn't capture, to any extent, the way different instruments apply velocity, breath, volume etc. to the overall dynamic. Beyond the "loudness" analysis itself, I imagine playing one note in each playing range with varying combinations of dynamic parameters wouldn't be too difficult.

It would be something to run overnight, over many sessions, with options to set the analysis resolution (the full 1-127, every second value (1, 3, 5, 7), every third, etc.).
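A quick sketch of why the resolution option matters, assuming each dynamic parameter is sampled at the same step and every combination is played once. `analysis_values` and `combination_count` are names I made up for the example.

```python
def analysis_values(step, lo=1, hi=127):
    """Values to test for one parameter: every `step`-th value from lo to hi."""
    return list(range(lo, hi + 1, step))


def combination_count(step, parameter_count):
    """Number of test notes if every combination of values is played once."""
    return len(analysis_values(step)) ** parameter_count


print(analysis_values(2)[:4])     # every second value: 1, 3, 5, 7, ...
print(combination_count(1, 3))    # full resolution, three parameters
print(combination_count(8, 3))    # coarse resolution, three parameters
```

The count grows with the power of the number of parameters, which is why this is an overnight job at anything near full resolution.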

Sat, 2009-10-17 - 20:11 Permalink

here are a few more

Loop Record

Loop record would be extremely helpful for recording percussion.

Metronome Count In

It seems the metronome doesn't count in when recording into a part that is looped-- which is to say I have to deselect the loop before recording.

Count In Only

I've figured my way around a "metronome in play" by creating a metronome phrase pool in my main library with some different divisions. It would be cool, in this case, to have an option to click on the count-in but then stop the metronome the moment play starts, so the phrase can take over without doubling.

Rhythmic guides

This is somewhat related to the issue of not being able to see more than a few parts at one time.

It would be cool to be able to select a figure and run a function that would create 1px vertical colored lines for each symbol that run all up and down the instrument.

This way we could mark the primary and secondary rhythmic ideas in different colors and be able to refer to them visually as we scroll the instrument sheet.

Screen Sets

I was surprised to find that open libraries and palettes were not included in screen sets. To me these are much more necessary than the circle of fifths display or the harmonic context window.

In the 3D side of the software world, many apps use a system of subdividing windows -- take the full window, divide it vertically, select the left half, divide it horizontally -- and load a different interface element into each pane. Perhaps that could be combined with tabs to allow for multiple libraries, palettes, phrase editors etc.

I'm finding that there just isn't enough space for all the necessary functions. I try to neatly line all the windows up to get the most out of the two monitors (three really, as one displays the video to be scored) but I find inevitably they get bumped around; it becomes a mess.

Mon, 2009-10-19 - 08:01 Permalink

choo choo

Remap Input Velocity Range

I've been trying to avoid touching volumes or mixing parameters until the late stages of composition.

It would be very helpful if we could quickly scale the full velocity range of the keyboard input to a specified range. This way we can ensure we stay within the intended dynamic of a section without having to play with the balance between volume and dynamic expression.

This could exist as a very small range slider [--[===]-] somewhere around the record button.

Another way to address this would be to create Dynamic Ranges -- ppp mf fff etc -- in the same mold as playing ranges. These would remap velocity and possibly even cc's as well (CC01 CC02 and CC11 in particular).
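The remapping itself is just a linear rescale. Here is a minimal sketch, assuming incoming velocities of 1-127; the function name and the named dynamic ranges (with their numbers) are placeholders I invented, not anything Synfire defines.

```python
def remap_velocity(velocity, out_lo, out_hi, in_lo=1, in_hi=127):
    """Linearly map a played velocity from [in_lo, in_hi] to [out_lo, out_hi]."""
    velocity = max(in_lo, min(in_hi, velocity))      # guard against odd input
    position = (velocity - in_lo) / (in_hi - in_lo)  # 0.0 .. 1.0
    return round(out_lo + position * (out_hi - out_lo))


# Hypothetical "dynamic ranges" in the same mold as playing ranges.
DYNAMIC_RANGES = {"pp": (20, 45), "mf": (60, 85), "ff": (100, 127)}

lo, hi = DYNAMIC_RANGES["mf"]
print([remap_velocity(v, lo, hi) for v in (1, 64, 127)])
```

The same function would work for CC values if the input range is changed to 0-127.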

Left and Right Arrows for CC data

It would be great if we could use the left and right arrows when editing a span of velocity/bend/cc data to move in greater increments than the up and down arrows.

Loop Issues

Sometimes I find if I try to record while there is a loop selection, the transport will start but no music will play and no note data will be recorded. Deselecting the loop seems to fix it. I'll try to find a repeatable procedure to cause this.

Note Controllers

I was thinking about the discussion earlier about attaching pitch and cc data to notes. In that discussion, I think the hang up ended up being implementing an advanced gui.

It occurred to me that using the default phrase editor will work fine and offer some interesting possibilities that I would be very excited to see implemented.

There is a lot of detail. Bear with me.

For any note, we could open up a phrase editor, that would default to the length of the note.

We draw in any data - mod,pitch,cc,transpose,variation. This data would move with the note. There would be options in the note to determine how it combines with the instrument-parameter data (add, replace, subtract, average etc). This data would always move with the note, and never lose its integrity as notes overlap.
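A sketch of the combine options for a single controller value, assuming 0-127 values and assuming "subtract" means the note's value is taken away from the instrument's value. The function name and mode strings are illustrative only.

```python
def combine(note_value, instrument_value, mode):
    """Combine a note-attached controller value with the instrument-level one."""
    if mode == "replace":
        result = note_value
    elif mode == "add":
        result = instrument_value + note_value
    elif mode == "subtract":
        result = instrument_value - note_value
    elif mode == "average":
        result = (instrument_value + note_value) / 2
    else:
        raise ValueError(f"unknown mode: {mode}")
    return max(0, min(127, round(result)))  # keep the result a valid MIDI value


for mode in ("replace", "add", "subtract", "average"):
    print(mode, combine(20, 100, mode))
```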

The "note" or note-phrase could also be altered to have any phrase... or no phrase... but more on that later.

These articulations then could be stored and accessed as temporary project Articulations in the same way that Synfire now creates temporary devices for instruments created on the fly. They could also be stored with the instrument for use in all projects.

This would allow us to create complex, compositionally specific articulations using many controllers, that can be played easily on the keyboard. It also frees us up from worrying about the relationship between the note and cc data as the two are moved independently, while still allowing a global cc control by adding, averaging etc. note and instrument controllers.

By allowing a single note to attach a phrase we can play multi note sequences quickly on the keyboard. Trills, grace notes etc. could be created with the same playability as sample library articulations. Optionally a phrase could be made as a silent representation of sample data, so one could trigger a whole step trill sample, and have a midi representation of the notes for Synfire to consider in its harmonic analysis without actually outputting note data of the alternating notes.

Articulations could be created with no notes to allow for shape based cc control. For example: one would create two tracks of an instrument; on one would be the note data, and on the other, static notes controlling a variety of no-note-articulations, each affecting the cc data of the note track on an independent rhythmic plane. This could also be used to control audio effects in compositionally complex ways.

Articulations could be automatically generated from an instrument (say, from a recorded performance), storing whatever controller data occurs simultaneously with each note, with a user adjustable offset on either side (to capture the tails or a bit before the note). This would allow us to bake the controller performance into the notes so they can be freely adjusted without drawing in the data by hand.
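Roughly what I picture for the automatic capture, assuming notes and controller events are stored with tick timestamps. The function name, the event tuples and the tick values are all made up for the example.

```python
def capture_controller_data(note_start, note_end, cc_events, pre=0, post=0):
    """Collect controller events that overlap a note, plus optional margins.

    cc_events is a list of (tick, cc_number, value). Returned ticks are
    relative to the note start, so the data can travel with the note.
    """
    lo, hi = note_start - pre, note_end + post
    return [(tick - note_start, cc, value)
            for tick, cc, value in cc_events
            if lo <= tick <= hi]


events = [(430, 1, 10), (480, 1, 20), (720, 1, 64), (1000, 1, 30), (1440, 1, 0)]
print(capture_controller_data(480, 960, events))                   # note only
print(capture_controller_data(480, 960, events, pre=60, post=60))  # with margins
```

Events caught by the "pre" margin come out with negative offsets, meaning they fire slightly before the note wherever it is moved.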

Segments could be selected and turned into articulations automatically, replacing the note with a single symbol with the articulation assigned.

Articulations can be nested... For a multi note articulation, each note can have its own articulation. This, again would let us play complex combinations easily and directly on the keyboard.

Harmony, variation and interpretation parameters at the articulation level could vary the articulation in a way that traditional articulations cannot. Some sort of round robin control could scale/offset the various parameters to add an organic variety to repeated notes.

For all articulations a hot key toggle could display all of the "ghost notes" of the articulation so the actual composition isn't lost in the abstraction of articulations.

If a ghost note were to be edited, we would be given the options to: alter all instances of the articulation, create a new articulation with the alteration, or bake the altered articulation out as segments in the phrase (each with the cc data baked into the symbols) to be edited directly in the composition.

Articulations with multi-note phrases could also be added, automatically to an open library for general use in the composition.

As a final general note about the current articulation setup, it seems like articulations would be better to be assigned to Symbols instead of Segments. As it is, I am constantly breaking apart compositionally logical segments in order to assign the needed articulations. It seems the integrity of the phrase shouldn't be sacrificed for the changing of articulations. It would be especially important in context of this last idea...

Segment tags

This is another one that I mentioned before but it relates to the above.

I would really like to be able to assign a segment to multiple instruments -- by right clicking the note itself.

In an articulation where the note has been replaced with multiple voices (a chord) those voices could be distributed to whatever instruments are assigned to the segment. Which voice gets assigned to which symbol would be assigned in the note-phrase editor.

For the display of segments with multiple instruments assigned, color coding on the symbol borders could suggest how many instruments are assigned to a note and their type.

Instruments in similar families would have a similar hue. For example, if a violin, viola and cello were assigned to a note the border could repeat a dashed pattern of dark green, medium green and light green. This would be unobtrusive and give a good overview at a glance.

A synth could be added, making the border LtGrn-MedGrn-DkGrn-LtBlue -- showing that there are four instruments, that the majority of them are strings, and that there is a synth.

Segments assigned to multiple instruments would always show up in all of the assigned instruments' phrase editors, but moving the segment in any one would move all of them. The other properties of the segments, such as playing range etc., would be set in the instrument's specific phrase editor.

I'd be happy to see the border remain 1px thick and use the colors as such.

Segment tags could also be used to obtain a single phrase editor functionality. A ghost instrument with no midi output could hold the phrase.

Sounding notes would be created by assigning different instruments to the symbols. The ghost instrument would be ignored and only the other instruments would sound. This way we could create multiple ghost instruments with different groupings of instruments where compositional clarity is maintained in passages where notes are quickly passed back and forth between different instruments.

This is not quite as functional as the single phrase editor I described before, but by manipulating the current instrument centric method with ghost instruments, it wouldn't require the rewrite.

Tue, 2009-10-20 - 10:33 Permalink

Wow. That's a lot of creative input. Thanks. I skimmed your posts and found quite a few interesting things. I did not yet get around to reading everything thoroughly and taking notes. I marked this topic for revision and will get back to it asap.

Wed, 2009-10-21 - 02:31 Permalink

I hate to add any more to the pile, but this seemed like a good one. Not sure how complex though.

Find Part by MIDI in pattern matching.

I find one of the biggest slowdowns, in any sequencer, is navigating to the correct data for editing.

How difficult would it be to adapt Synfire's musical generalization to pattern matching as a search function?

For instance, let's say I hear a part while playing that needs changing... I stop the transport and hit the search hot key. I then input a fair approximation of the part I want to fix; something rhythmically in the ball park, but not to a click and only with the general melodic shape, not specific notes.

Synfire could then bring up a phrase editor with the closest match in the vicinity of the playhead. If it's the wrong instrument, the left and right arrows could quickly page through the next closest matches.

1. Search Hotkey

2. Midi (dat---dat-dat---dat)

3. Search key

4. Left and right keys if necessary

I think all of that could come off in a few seconds, and would involve us more in the process of listening and working directly with the music, instead of mousing and navigating through software.

Hopefully, the tempo could be extracted from the proportions in the rhythms of the search phrase, so the search can be done on the fly without preparing a click, waiting for a count-in etc...
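To show what I mean by using the proportions of the rhythm, here is a small sketch, assuming note onsets are available as timestamps in any unit. Both function names are invented, and real matching would need to tolerate extra or missing notes, which this does not.

```python
def rhythm_proportions(onsets):
    """Express the gaps between onsets as fractions of the whole phrase.

    The result does not depend on tempo: the same rhythm played twice as
    slow gives the same proportions.
    """
    intervals = [b - a for a, b in zip(onsets, onsets[1:])]
    total = sum(intervals)
    if total <= 0:
        raise ValueError("need at least two onsets in increasing order")
    return [i / total for i in intervals]


def rhythm_distance(onsets_a, onsets_b):
    """Smaller is more similar; phrases with different note counts never match."""
    pa, pb = rhythm_proportions(onsets_a), rhythm_proportions(onsets_b)
    if len(pa) != len(pb):
        return float("inf")
    return sum(abs(x - y) for x, y in zip(pa, pb))


played = [0, 480, 720, 1200]       # search phrase, played freely
stored = [0, 960, 1440, 2400]      # same rhythm at half the tempo
other = [0, 300, 600, 1200]        # a different rhythm
print(rhythm_distance(played, stored), rhythm_distance(played, other))
```

Candidates near the playhead could then be ranked by this distance, with the left and right keys stepping through the ranking.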

Thu, 2009-10-29 - 15:40 Permalink

Update: Just read your posts again and added some of the items to our list. Others were there already. Thanks also for identifying a few bugs.

A few comments:

The reason screen sets do not support palettes and libraries is that these are based on external files that might not be available anymore when the arrangement document is moved (or that might have moved themselves). Palettes and libraries would need to be embedded(?). Or some dialog may pop up asking for the files if it can't find them.

The window layout considerations are a permanent topic here. Tabbed, or not tabbed is the question.

Attaching phrases to symbols is an intriguing idea, but definitely way too complex to handle, both for the user and the system. Small controller vectors might work, but not phrases.

I see the point that articulations should attach to both symbols and segments.

Being able to distribute a single segment over multiple instruments seems cool, but it would not work because of the different playing ranges. There is no way to predict the melodic integrity of the segment across instruments.

Search by gesture would be doable, but eat up a lot of development resources that would be missing elsewhere. I also doubt such a feature can be reliable and robust enough to be used regularly. Intriguing thought nevertheless.

Andre

Thu, 2009-10-29 - 21:11 Permalink

Update: Just read your posts again and added some of the items to our list. Others were there already. Thanks also for identifying a few bugs. A few comments:

The reason screen sets do not support palettes and libraries is that these are based on external files that might not be available anymore when the arrangement document is moved (or that might have moved themselves). Palettes and libraries would need to be embedded(?). Or some dialog may pop up asking for the files if it can't find them.

Broken reference dialogs seem to be the way most software handles it.

For the 1000 times that I open a project and want the library and basic palette to pop up, I would gladly deal with a once or twice a year broken reference due to a disconnected hard drive. To me it's a non-issue.

The window layout considerations are a permanent topic here. Tabbed, or not tabbed is the question.

I think tabbed, and with user customizable window divisions would be the best. Synfire needs all the help it could get in this department.

It would take me four monitors to fit in all of the bits I use on a regular basis.

Attaching phrases to symbols is an intriguing idea, but definitely way too complex to handle, both for the user and the system. Small controller vectors might work, but not phrases.

Here is a program, WIVI, that does just that. If it's too complex for the system I understand, but why would it be?

(http://wallanderinstruments.com/?menu_item=tour_smart_sequences)

I don't see why it would be too complex for the user.

A hotkey to turn a selection into an articulation and one to dissolve one from a symbol, doesn't sound complex to me at all.

The main goal is to trigger figurations on the keyboard while practicing/noodling so we can experiment with composing complex parts at an alternate timescale... think of the obvious Beethoven's 5th.

If it could work with the harmonic context under the play head, great. If, for preview purposes, it can't calculate that, static intervals would be fine.

I see the point that articulations should attach to both symbols and segments. Being able to distribute a single segment over multiple instruments seems cool, but it would not work because of the different playing ranges. There is no way to predict the melodic integrity of the segment across instruments.

In the post I suggested that once they were assigned, the playing ranges etc. of the individually assigned instruments could be set in their respective phrase editors... I'm not so sure this approach is perfect. Especially since the first thing I would probably do is try to bump the intervals of the different instruments up and down while maintaining the same beat, to quickly harmonize and arrange a melody.

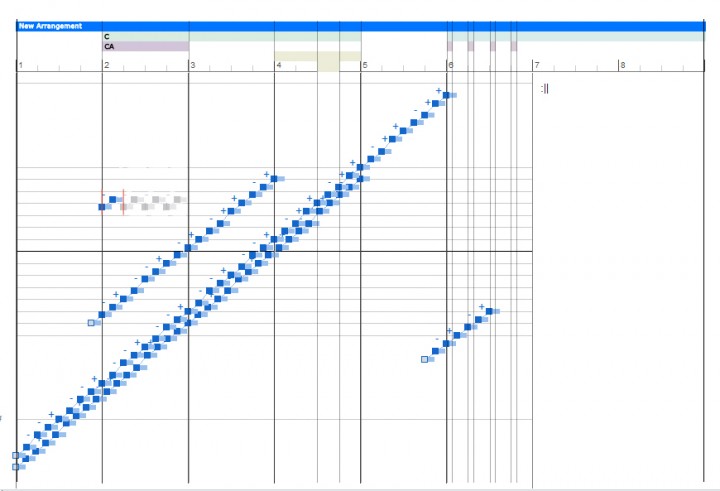

This issue is the Achilles heel of this program. Synfire is all about speed at the cost of precision. Many things are faster than a traditional sequencer, but I am always fighting the system to achieve specific goals. Often spending a great deal of time adjusting system variables.

-- before synfire this is how I edited in cubase... Sonar, seen here, has an even better implementation; switching instruments within the editor is a breeze.

The example above, which I've posted before, shows how a well designed editor can work.

Not only is it vastly faster, but it helps to separate composition from arrangement. I do think it could be improved beyond what is featured in the video, especially for the creation of complex parts with many instruments.

I have reattached an old sample image I made a while back that demonstrates the problem to some degree.

Assume that the long run is assigned in small chunks to different groups of instruments -- maybe three notes at a time ...

[oboe, flute, bassoon, xylophone] all assigned to three notes

[violin1, violin2, viola, synth] to two notes

[tuba, bass trombone, bass clarinet, trombone 1, trombone 2, trombone 3, trumpet 1, trumpet 2, trumpet 3, piccolo trumpet] --- to a 5 notes sequence

Perhaps the assignments are staggered and not assigned in perfect groups as such.

The entire visual integrity of the simple compositional idea will be destroyed, split up and pushed completely off the screen.

The only way to deal with it is to open 30 phrase editors and lose your mind alt-tabbing, or lose your mind scrolling up and down the instrument sheet trying to remember if the beat was the "and of 3" or 4, with no visual reference as to where the beats fall in the rest of the arrangement.

My template has about 180 instruments in the instrument sheet, minimized. This is largely because the instruments are available across the entire arrangement, not just in containers; adding them as they are needed in an orchestral setup, and arranging them in a sensible order as they are added, would be an absolute time drain.

I almost never see more than one part in the instrument sheet, just lots of unused minimized instruments, so as a visual overview of the score it's useless. It is, however, a very fast way to reliably find and select the next instrument to record to.

I like the instrument sheet. MOTU has a similar setup and it works very well for large instrumental setups...

The biggest roadblock to displaying lots of parts in a phrase editor seems to be all octaves sharing a single center line.

Perhaps the best solution would be to adapt the dashed colored border idea so that as Symbols overlap, not only is the overlap noted but the instruments themselves can be discerned by a subtle, discreet, color coding on the border as described before--or at least their instrument family discerned.

Hotkey+clicking a single color on the border would let us quickly edit only a single instrument (with the others grayed out behind it).

[the gray in the reference image was something different, how to represent phrase looping if containers were treated more as flags than black boxes to disrupt the flow of composition]

(t_synfireeditor_181.jpg)

Fri, 2009-10-30 - 17:41 Permalink

[quote]I think tabbed, and with user customizable window divisions would be the best. Synfire needs all the help it could get in this department.

I would like to keep the convenience of dragging between arrangements/libraries etc. Does that require non-tabbed?

Fri, 2009-10-30 - 22:53 Permalink

I thought about the articulation bit some more.

Assuming that it is technically feasible, I suppose the problem could be split into two solutions.

1) plain controller data only, attached to symbols with the functionality described before.

2) a system to temporarily assign a phrase articulation to the MIDI input keyboard, which could be played live but would be recorded as a regular take (if three notes are played and the articulation phrase is three notes, 9 notes will be recorded.)
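The second option can be sketched as a simple expansion on input, assuming static intervals (no harmonic context) and tick timestamps. The function name and the example phrase are invented for illustration.

```python
def expand_with_phrase(played_notes, phrase):
    """Replace each played note with the articulation phrase, transposed to it.

    played_notes: list of (start_tick, pitch)
    phrase:       list of (offset_tick, interval_in_semitones)
    The result is what would be recorded as a regular take.
    """
    return [(start + offset, pitch + interval)
            for start, pitch in played_notes
            for offset, interval in phrase]


grace_figure = [(0, 0), (120, 2), (240, 0)]        # three-note figure
played = [(0, 60), (480, 64), (960, 67)]           # three keys pressed
take = expand_with_phrase(played, grace_figure)
print(len(take), take[:3])
```

Three played notes with a three-note phrase give nine recorded notes, and nothing downstream needs to know an articulation was involved.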

This could be further extended by making variation a live case, so that the input articulation phrase could be played flipped inverted etc with a live midi control... Other candidates could be strum phrases on STRING chord voicing (upstrokes, downstrokes, patterns defined by the user)

To some degree this simplifies these features by handling them on the input. The system doesn't need any knowledge of how they were handled/created it just records their output.

This separation neglects articulations where the note data and cc data are tied up in a compositionally complex unit... That is where having cc data and phrase data attached as an articulation would be best.

as a side thought

Record To Parameter Feedback

It would be handy to have audible feedback for recording and rehearsing controller recordings where the controller is not the native CC.

For instance, if I want to use my breath controller (CC 2) to record to the parameter mod, I can, but I can't hear what I'm doing, which makes it far less useful.

It would be necessary to have it function during record to parameter, but also while auditioning/noodling.

Mon, 2009-11-02 - 02:47 Permalink

Non Diatonic Highlighting for Take and Static

It would be very useful to have notes outside of the current harmonic context highlighted for both takes and static symbols.

A very small red dot would do the trick.

This would make it easy to see at a glance where key changes need to be made in a recorded take and which notes are likely being misinterpreted within the current harmonic context.
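As a simplified illustration, assuming the harmonic context can be reduced to a set of allowed pitch classes (Synfire's actual harmonic context is richer than this), the check for which notes get the red dot could look like the following. The names are hypothetical.

```python
C_MAJOR = {0, 2, 4, 5, 7, 9, 11}   # pitch classes, C = 0


def notes_to_highlight(pitches, scale_pitch_classes):
    """Return the MIDI pitches that fall outside the given pitch-class set."""
    return [p for p in pitches if p % 12 not in scale_pitch_classes]


take = [60, 61, 64, 66, 67]        # C, C#, E, F#, G
print(notes_to_highlight(take, C_MAJOR))
```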

Humanization

This one would be a bit more complex.

I was thinking of a system where controller channels could be analyzed for transients (like a transient detector for audio loops) which could be then used as origin points for time shifting / humanizing notable points in a cc controller performance.

If the analysis of cc data is an issue, even placing markers by hand, over the original cc take would work.

Once in place, a part could be copied over to several parts. A new humanize parameter could control the amount of time randomizing and scaling as well as smoothing... all set and controlled by one general parameter.

With this setup, we could easily create a global humanize parameter in a container and quickly control how tight an ensemble is playing.
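A bare-bones sketch of the time-shifting part, assuming the marker positions (detected or placed by hand) are tick values and a single amount between 0 and 1 controls everything. The function name, the `max_shift` default and the uniform random distribution are my own assumptions; scaling and smoothing are left out.

```python
import random


def humanize(marker_ticks, amount, max_shift=30, seed=None):
    """Shift each marker by a random offset scaled by `amount` (0.0 to 1.0).

    amount=0 leaves the timing untouched; amount=1 allows shifts of up to
    `max_shift` ticks either way. A seed makes the result repeatable.
    """
    rng = random.Random(seed)
    return [t + rng.uniform(-max_shift, max_shift) * amount
            for t in marker_ticks]


markers = [0, 480, 960, 1440]
tight = humanize(markers, 0.0, seed=1)
loose = humanize(markers, 1.0, seed=1)
print(tight)
print(loose)
```

Giving each copied part a different seed and driving `amount` from one container-level parameter would give the "how tight is the ensemble" control.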

Wed, 2009-11-04 - 06:14 Permalink

Harmonizer Weighting

It would be very useful to have a weighting parameter for parts in the harmonizer.

I have a melody, a vocal line, with a fair bit of ornamentation that was captured in the midi mockup of it. I have one other line as counterpoint to guide the basic harmony.

I would like to be able to let synfire weigh the counterpoint line and more or less ignore the chromatic alterations of the vocal line that happen here and there, by setting its weight low.

This could also be used to force a foreign key and harmony onto a melody by harmonizing it to a contrasting figure by a certain weight.

If weight is too complex, how about a "primary" check box?
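To illustrate the weighting idea only (this is not how Synfire's harmonizer works, which I have no knowledge of), assume each part contributes its notes to a pitch-class tally in proportion to its weight. The function name, weights and notes are invented.

```python
def weighted_pitch_class_profile(parts):
    """Tally pitch classes across parts, each note counting by its part's weight.

    parts: list of (weight, [midi_pitches])
    Returns a 12-element list, index 0 = C.
    """
    profile = [0.0] * 12
    for weight, pitches in parts:
        for pitch in pitches:
            profile[pitch % 12] += weight
    return profile


vocal = (0.1, [60, 61, 62])          # ornamented melody, mostly ignored
counterpoint = (1.0, [60, 64, 67])   # guides the harmony
profile = weighted_pitch_class_profile([vocal, counterpoint])
print(profile)
```

The chromatic C# from the vocal line barely registers next to the counterpoint notes. A "primary" check box would just be the special case of weights 1 and 0.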